Everyone loves fast websites. It doesn’t matter if you’re a casual internet user or a power user – website performance matters. Research by Google, Microsoft, Yahoo! and many others has shown that fast websites have better user metrics, whether that be converting more users into customers, more searches performed, higher usage or increased order values. On top of those benefits, Google announced in April 2010 that website performance was now a ranking factor, such that slow loading websites could be ranked lower in the search results for a given query or, conversely, fast websites could be ranked higher.

A Brief History

I live in Queensland, Australia, specifically on the sunny Gold Coast.

Since I started my personal blog back in 2004, I’ve always wanted my hosting to be fast for me personally. My desire for speed wasn’t born out of some crackpot web performance mantra; it was far simpler than that – I hate slow email. Having my web hosting in Australia guaranteed that my personal email was going to be lightning fast and, as a happy by-product, my website screamed along as well.

In November 2012 I decided that I’d attempt to simplify my life, go hunting for a new web host that provided some additional features I was looking for and consolidate some of my hosting accounts. I still needed the bread and butter PHP and MySQL for WordPress but wanted some more options for some smaller projects I wanted to dabble with this year, so I added into the mix:

- PostgreSQL

- Python

- Django

- Ruby

- Rails

- SSH access

- Large storage limits

- No limits on the number of websites I could host

- Reasonably priced

I begrudgingly accepted the fact that I’d probably end up using a United States web host and had to build a bridge over my soon-to-be 200ms+ ping. After a moderate amount of research, I signed up with Webfaction, who tick all of the above boxes.

Crawling

Google is a global business with local websites such as www.google.com.au or www.google.de in most countries around the world. They service their massive online footprint by having dozens of data centers spread around the world. Users from different parts of the world tend to access Google services via their nearest data center to improve performance.

What a lot of people don’t realise is that, despite Google having data centers around the world, Google’s web crawler, Googlebot, crawls the internet only from the data centers located in the United States of America.

For websites that are hosted in the far reaches of the world, this means it takes more time to crawl a web page compared to a web page hosted in the US, due to the vast distances, network switching delays and the obvious limit of the speed of light through fibre optic cables. To illustrate that a little more clearly, the table below shows ping times from around the world to my Webfaction hosting located in Dallas, TX:

| Location | Ping time |
|---|---|
| Dallas, U.S.A. | 3ms |
| Florida, U.S.A. | 32ms |
| Montreal, Canada | 53ms |
| Chicago, U.S.A. | 58ms |
| Amsterdam, Netherlands | 114ms |
| London, United Kingdom | 117ms |
| Groningen, Netherlands | 133ms |
| Belgrade, Serbia | 150ms |
| Cairo, Egypt | 165ms |
| Sydney, Australia | 195ms |
| Athens, Greece | 199ms |
| Bangkok, Thailand | 257ms |
| Hangzhou, China | 267ms |
| New Delhi, India | 318ms |
| Hong Kong, China | 361ms |

Based on the table above, it is clear that moving to US web hosting was going to have a significant impact on my load time as far as Googlebot was concerned.
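To put the speed of light limit mentioned earlier into numbers, here is a rough sketch that estimates the lowest possible round trip time between Sydney and Dallas. The city coordinates, the assumption that light in fibre travels at about two thirds of its speed in a vacuum, and the idea that the cable follows the shortest great-circle path are all my own simplifications, not measurements.

```python
import math

EARTH_RADIUS_KM = 6371.0            # mean Earth radius
SPEED_OF_LIGHT_KM_S = 299_792.458   # speed of light in a vacuum
FIBRE_FACTOR = 2 / 3                # assumption: light in fibre is roughly 2/3 as fast

# Approximate city-centre coordinates (latitude, longitude); assumed values.
SYDNEY = (-33.8688, 151.2093)
DALLAS = (32.7767, -96.7970)


def great_circle_km(a, b):
    """Haversine distance in kilometres between two (lat, lon) points."""
    phi1, phi2 = math.radians(a[0]), math.radians(b[0])
    dphi = phi2 - phi1
    dlam = math.radians(b[1] - a[1])
    h = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def min_round_trip_ms(distance_km):
    """Best-case round trip time over fibre, ignoring routing and switching delays."""
    one_way_seconds = distance_km / (SPEED_OF_LIGHT_KM_S * FIBRE_FACTOR)
    return 2 * one_way_seconds * 1000


distance = great_circle_km(SYDNEY, DALLAS)
rtt = min_round_trip_ms(distance)
print(f"Sydney to Dallas: {distance:,.0f} km, best-case round trip {rtt:.0f} ms")
```

Under those assumptions the theoretical floor sits well under the 195ms measured from Sydney in the table, with the remainder coming from real cable routes and network switching. No amount of server tuning can get below that floor.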

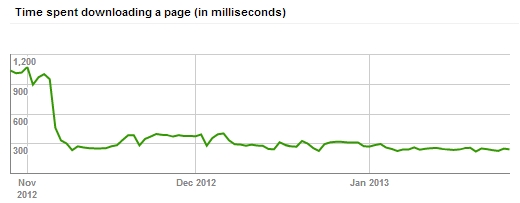

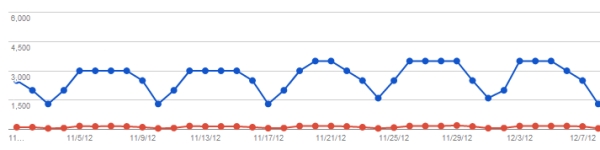

While I was still using my Australian web hosting, Google Webmaster Tools was reporting an average crawl time of around 900-1000ms, which you can see reflected on the left of the graph below. After the move to Webfaction, it is now averaging around 300ms.

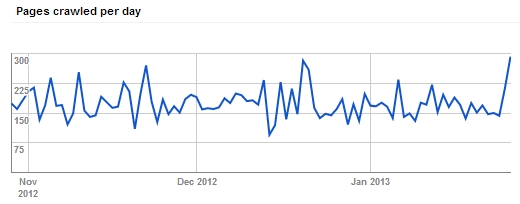

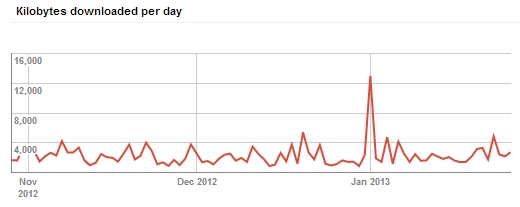

The next two graphs, also taken from the Crawl Stats section of Google Webmaster Tools, show the number of pages crawled per day and the number of kilobytes downloaded per day respectively.

Google crawl the internet in descending PageRank order, which means the most important websites are crawled the most regularly and most comprehensively, while the least important are crawled less frequently and less comprehensively. Google prioritise their web crawl efforts because, although they have enormous amounts of computing power, it is still a finite resource that they need to consume judiciously.

By assigning a crawl budget to each website based on its perceived importance, Google has a mechanism to spend an appropriate amount of resource crawling different sites. Most importantly for Google, it limits the amount of time they will spend crawling less important websites with very large volumes of content, which might otherwise consume more than their fair share of resources. In simple terms, Google is willing to spend a fixed amount of resource crawling a website, such that they could crawl 100 slow pages or 200 pages that loaded in half the time.
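The arithmetic behind that last sentence can be sketched in a few lines. Google hasn’t published how crawl budget is actually calculated, so the fixed time budget below is purely the simplified model described above, and the function name and figures are hypothetical.

```python
def pages_within_budget(budget_ms: float, avg_page_ms: float) -> int:
    """Number of whole pages that fit into a fixed crawl time budget."""
    if avg_page_ms <= 0:
        raise ValueError("average page time must be positive")
    return int(budget_ms // avg_page_ms)


# Hypothetical budget: enough time for 100 pages at 1000ms each.
budget_ms = 100 * 1000

slow_pages = pages_within_budget(budget_ms, 1000)  # pages taking 1000ms each
fast_pages = pages_within_budget(budget_ms, 500)   # pages loading in half the time

print(f"slow site: {slow_pages} pages, fast site: {fast_pages} pages")
```

In this model, halving the average load time doubles the number of pages that fit into the same budget, which is why load time matters most for large sites that aren’t being fully crawled.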

It’s been reported several times before that improving the load time of a website will have an impact on the number of pages that Google will crawl per day. Interestingly, that isn’t reflected in the graphs above at all. I’d speculate that since my personal blog has a PageRank of 4 on the home page, that provides ample crawl budget for the approximately 625 blog posts I’ve published over the years. While reducing the load time of a site would normally help, it didn’t have an impact on my personal blog because it isn’t suffering from crawling and indexing problems.

Indexing

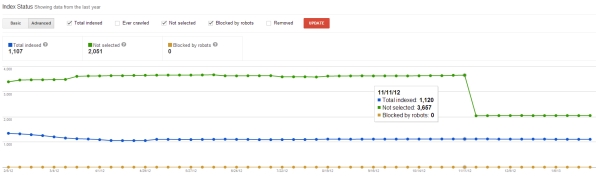

In July 2012, Google announced a new report in Google Webmaster Tools named Index Status. The default view in the report shows the number of indexed URLs over time; however, the Advanced tab shows additional detail that can highlight serious issues with a website’s health at a glance, such as the number of URLs blocked by robots.txt.

One particular attribute of the Advanced report is a time series of URLs named Not Selected. These are URLs that Google knows about on a given website but that aren’t being returned in the search results because they are substantially similar to other URLs (duplicate content), or have been redirected or canonicalised to another URL.

The graph above shows a huge reduction in the number of Not Selected URLs on 11 November 2012, which was the week that I changed my hosting to Webfaction. It should be noted that I didn’t change anything when I moved my hosting; it was a simple backup and restore operation. That week Google Webmaster Tools reported nearly 1000 URLs were removed from Not Selected.

I’m a little confused as to what to make of that since the Total Indexed line in blue above didn’t increase at all. Is it possible that Google had indexed URLs as part of the Total Indexed which weren’t being returned for other reasons and somehow by improving the performance of my website it has meant that they aren’t part of Not Selected anymore?

While I’m grateful that Google Webmaster Tools now provides the Index Status report as it does provide some insight into how Google views a website, it could contain far more actionable data. For example, to help with debugging it would be great if you could download a list of URLs that Google is blocked from crawling via robots.txt or to download the list of URLs sitting within Not Selected.
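In the absence of a download option, one partial workaround is to test a list of your own URLs against your robots.txt locally. The sketch below uses Python’s standard `urllib.robotparser`; the robots.txt rules and URLs are made-up examples, and it only approximates Googlebot because the standard library parser doesn’t implement Google’s wildcard extensions.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt: str, urls: list[str], user_agent: str = "Googlebot") -> list[str]:
    """Return the URLs that the given robots.txt rules disallow for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Hypothetical robots.txt content and URLs, for illustration only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /tag/
"""

URLS = [
    "https://example.com/",
    "https://example.com/wp-admin/options.php",
    "https://example.com/tag/seo/",
    "https://example.com/2012/11/hosting/",
]

blocked = blocked_urls(ROBOTS_TXT, URLS)
for url in blocked:
    print("blocked:", url)
```

Feeding it the URLs from an XML sitemap would give a rough picture of what the report is counting, though it can’t say anything about the Not Selected bucket.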

Ranking

Below is a graph from the Search Queries report in Google Webmaster Tools from the start of November 2012. I changed my hosting on 4 November and, as mentioned in the Indexing section above, there was a significant change in the number of Not Selected URLs on 11 November.

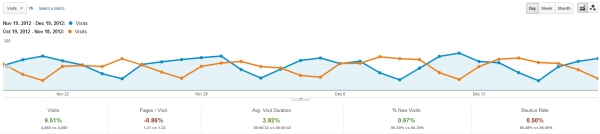

It is subtle, but the blue line in the graph above indicates a small uplift in impressions from around 19 November. Unfortunately I don’t have a screenshot showing the trend from before the start of November; however, it consistently showed the same curves as you can see above before the small increase in impressions around 19 November.

My personal blog doesn’t take a lot of traffic per month at around 4000-4500 visits, so it isn’t going to melt any servers. Even so, I thought it’d be useful to see if any of the above translated through to Google Analytics. The graph below compares organic search referrals for the 30 days from 19 November, lining up with the lift in impressions above, against the prior 30-day window. Over that time span organic visits to my personal blog grew by around 9.5% or about 400 visits, which is nothing to sneeze at. By comparison, traffic over the same time span has fallen by 6%-8% in each of the last three years, highlighting that a 9% growth is really quite good.

Opportunity

One small personal blog does not make a foregone conclusion, but it does begin to get the cogs turning regarding opportunity. Businesses located in the USA are likely to use US web hosting naturally, so latency isn’t really an issue for them.

However, for businesses located large distances from the US, an opportunity could exist to get a small boost by either switching to US hosting or subscribing to a content delivery network with US points of presence.

A word of caution: Google uses a variety of factors to determine which audience in the world a website’s content best serves. If your website uses a country specific domain such as a .com.au, that website will automatically be more relevant to users searching from within Australia. If, however, you use a global top level domain (gTLD) such as a .com, Google uses a variety of factors to determine what audience around the world best fits that content. Among those factors is the location of the web hosting, such that a .com using UK web hosting would be more relevant to United Kingdom users. If your business primarily targets a country such as Australia and uses a gTLD, Google Webmaster Tools provides a mechanism to apply geotargeting to the website. Applying geotargeting to a gTLD, or to one of a small number of special case country code domains (ccTLDs), causes Google to associate that website with the specified geographic area as if it were using the associated country specific domain.